Ensuring responsible AI in the public sector

As governments worldwide integrate AI into decision-making, the question is no longer whether to use AI, but how to do so responsibly.

From predictive analytics in law enforcement to chatbots for citizen engagement, we have seen how AI can reshape the way governments interact with citizens and manage resources. EY has also supported governments in considering AI-driven tools for fraud detection, resource allocation and crisis management, enabling them to respond more effectively to challenges.

As AI continues to evolve, its integration into the public sector brings opportunity and risk. In February 2024 the Irish Government published Interim Guidelines for the Use of AI in the Public Service – these guidelines are currently being reviewed and updated, and further guidance is expected to be published soon.

The 2024 Guidelines set out seven key requirements for the responsible use of AI in the public service, and these are likely to remain central to the updated guidelines. The Government has committed that AI tools used in the civil and public service will comply with all seven:

- human agency and oversight;

- technical robustness and safety;

- privacy and data governance;

- transparency;

- diversity, non-discrimination and fairness;

- societal and environmental well-being; and

- accountability.

While AI tools can assist human capabilities, the Government guidelines are very clear that they should never replace human oversight. Every AI tool used in the public service must be part of a process that has human oversight built in.

Several important steps should be followed to support the responsible use of AI in the Irish Government sector. These include:

1. Develop a responsible AI governance framework: To ensure that AI usage complies with the guidelines, an initial risk assessment must be completed to consider the legal, moral and societal impact of the proposed development. This assessment should be the first step in the development process and align with a documented “Responsible Governance Framework” that covers each of the seven areas set out in the guidelines. The risk assessment should also address any challenges around AI and set out how the development demonstrates individual and societal benefits.

The absence of a Responsible Governance Framework can give rise to a lack of accountability, increase the risk of bias in the data used by AI models and undermine the prospect of using AI for good. The Responsible Governance Framework should recognise ethical, moral, legal, cultural and socioeconomic implications and drive a human-centred, trusted, accountable and interpretable AI system.

2. Establish ongoing oversight and accountability: The development of AI-based systems should include regular, ongoing processes to monitor accountability and oversight. It is not sufficient to consider these guiding principles only at the start of a project. There should be consistent performance monitoring and regular audits of the decision-making process against pre-defined metrics, so that the system performs consistently and accountability is maintained. A key element of the monitoring process is testing for accuracy and bias. Robust monitoring is also necessary to confirm that data sets represent the diversity of potential end users in real-world conditions.

3. Ensure legal and regulatory compliance: Key requirements include the GDPR and the EU AI Act. Any data used in an AI model must comply with GDPR requirements. Under GDPR, personal data, including facial images and voice recordings, may be processed only where there is a lawful basis, such as the individual’s consent. The EU AI Act began a phased implementation in August 2024. It requires AI systems to be categorised by risk (unacceptable, high, limited or minimal) and imposes stricter requirements on higher-risk applications. Those developing high-risk AI must ensure transparency, robustness and traceability, conduct risk assessments, and maintain human oversight. The Act also mandates regular monitoring and reporting of AI systems’ performance and security.
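The accuracy and bias checks described in step 2 could be sketched roughly as follows. This is a minimal illustration only, not part of the guidelines or of any EY tooling: the function name `audit`, the two thresholds and the choice of "selection rate gap" as the bias measure are all assumptions made for the example, and a real project would agree its own pre-defined metrics.

```python
from collections import defaultdict

# Hypothetical thresholds, assumed for illustration only.
MIN_ACCURACY = 0.90
MAX_SELECTION_RATE_GAP = 0.10


def audit(records, min_accuracy=MIN_ACCURACY, max_gap=MAX_SELECTION_RATE_GAP):
    """Check a batch of decisions against pre-defined metrics.

    records: iterable of (group, predicted, actual) tuples, where
    predicted and actual are 0 or 1.
    """
    records = list(records)
    if not records:
        raise ValueError("no records to audit")

    # Overall accuracy: share of predictions that match the actual outcome.
    correct = sum(1 for _, predicted, actual in records if predicted == actual)
    accuracy = correct / len(records)

    # Selection rate per group: share of positive predictions in each group.
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, predicted, _ in records:
        totals[group] += 1
        positives[group] += predicted
    rates = {group: positives[group] / totals[group] for group in totals}

    # Gap between the most and least favoured groups.
    gap = max(rates.values()) - min(rates.values())

    return {
        "accuracy": accuracy,
        "selection_rates": rates,
        "selection_rate_gap": gap,
        "accuracy_ok": accuracy >= min_accuracy,
        "bias_ok": gap <= max_gap,
    }


if __name__ == "__main__":
    sample = [("A", 1, 1), ("A", 0, 0), ("B", 0, 0), ("B", 0, 1)]
    print(audit(sample))
```

A failed check in a sketch like this would be a prompt for human review rather than an automatic action, in keeping with the human oversight requirement.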

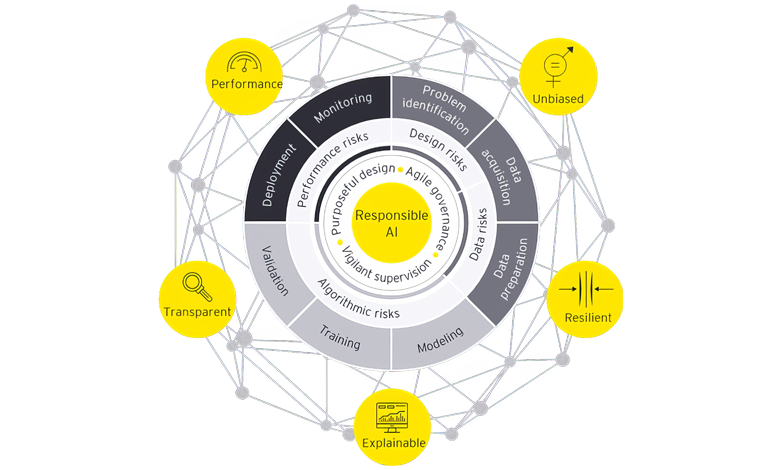

EY’s Responsible AI framework

Recognising the importance of developing AI in a responsible and compliant way, EY has developed a Responsible AI framework that can help to evaluate AI risk and build controls across trust attributes, risk categories and governance domains.

The framework is designed to ensure the following:

• Performance: The AI’s outcomes are aligned with stakeholder expectations and it performs at a desired level of precision and consistency.

• Unbiased: Inherent biases arising from the development team composition, data and training methods are identified and addressed through the AI design. The AI system is designed with consideration for the needs of impacted stakeholders and to promote a positive societal impact.

• Transparent: When interacting with AI, an end user is given appropriate notification and an opportunity to select their level of interaction. User consent is obtained, as required, for data captured and used.

• Resilient: The data used by the AI system components and the algorithm itself are secured from unauthorised access, corruption and/or adversarial attack.

• Explainable: The AI’s training methods and decision criteria can be understood, are ethical, documented and readily available for human operator challenge and validation.

Conclusion

There is no doubt that AI can bring enormous opportunity for the public sector: improved citizen experience, greater access to services, personalised citizen solutions, increased productivity and more data-driven policy making, to name but a few. However, as the old Dutch saying reminds us, “trust arrives on foot and leaves on horseback”. Trust takes time to build but is quickly lost, so sustained effort is required to build AI solutions in a responsible and trusted way.

W: www.ey.com/en_ie