High Performance Computing: dense, hot and power hungry

Specialist computing hardware enables new capabilities and opportunities but comes with some challenging hosting requirements. Niall Wilson from the Irish Centre for High End Computing explains.

The history of High Performance Computing (HPC) is as much a history of exotic data centre technology as it is of arcane processors, expensive networks and large storage devices. Indeed, the original Cray-1, dating from 1976 and featuring an elaborate Freon cooling system, was half-jokingly said by its creator Seymour Cray to have represented an even greater plumbing challenge than it did an electronic engineering one. HPC has since emerged from being a niche tool primarily used in academic and military research to being pervasive across a vast expanse of human endeavour. The techniques and technology of HPC are now widespread in fields as diverse as financial services, automotive manufacturing, energy production, medical science and, of course, big data. However, one consequence of this enormous computational capability is that it places demanding requirements on data centre hosting, in particular the ability to handle very high heat densities. Future data centre builds need to consider and plan for such infrastructure.

HPC differentiates itself by prioritising computation above all else: the fastest processors are used in large numbers and paired with the fastest networks, memory and storage systems in an attempt to keep these processors working flat out as much as possible. Ease of programming is often sacrificed in pursuit of performance, with developers adopting novel technologies such as graphics processing units (GPUs) in cases where these technologies have the potential to offer a big step up in computational capability. In short, cutting edge HPC technologies can enable larger or more difficult problems to be tackled. These advances often then trickle down to more mainstream computing. For example, GPUs were being used in HPC applications at least five years prior to their current widespread adoption for artificial intelligence tasks.

The scale of the systems at the forefront of HPC is enormous. For example, the Exascale Computing Project, sponsored by the US Department of Energy, aims to produce an exaflop system by 2021 which will be 50 times as powerful as the largest system in the world currently and will consume 20-30MW of electrical power. This highlights again the difficulty of hosting HPC infrastructure: these systems consume an awful lot of power and (very efficiently) convert it into heat. As with a lot of IT technology, the cooling requirements for HPC supercomputers seem to have come full circle: after an era of regular air cooled servers ten years ago, current systems once again require increasingly specialised cooling.
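To put those figures in perspective, the short Python sketch below works through the arithmetic they imply. It uses only the numbers quoted above (one exaflop, a factor of 50, and a 20-30MW power envelope); the function names are illustrative, and the assumption that the machine draws its full rated power around the clock for a year is a simplification rather than a project figure.

```python
EXAFLOP = 1e18  # floating point operations per second


def gflops_per_watt(flops, power_mw):
    """Implied efficiency in GFLOPS per watt at a given power draw in MW."""
    return flops / (power_mw * 1e6) / 1e9


def annual_energy_mwh(power_mw, hours_per_year=8760):
    """Energy used in a year, assuming constant full-power operation."""
    return power_mw * hours_per_year


# "50 times as powerful as the largest system" implies the size of that system.
current_largest_pflops = EXAFLOP / 50 / 1e15
print(f"Implied current largest system: {current_largest_pflops:.0f} petaflops")

for power_mw in (20, 30):
    print(
        f"{power_mw}MW: {gflops_per_watt(EXAFLOP, power_mw):.1f} GFLOPS/W, "
        f"{annual_energy_mwh(power_mw):,.0f} MWh per year"
    )
```

Under these assumptions, the exaflop target implies an efficiency of roughly 33 to 50 GFLOPS per watt, and nearly all of the energy drawn ends up as heat that the hosting facility must remove.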

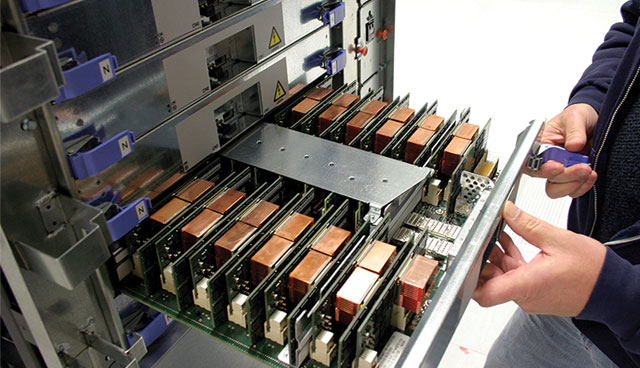

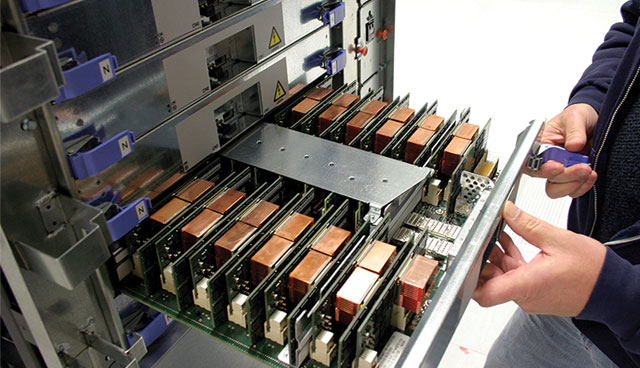

The Irish Centre for High End Computing (ICHEC) is Ireland’s national HPC centre which provides advanced technical solutions and infrastructure to academia, industry and the public sector. Throughout its 12-year history, ICHEC has managed a diverse range of HPC systems and has often been faced with the challenge of finding datacentre hosting for this infrastructure. ICHEC’s current HPC system (which is approaching four years old) fits 30kW in a single rack and requires water cooled heat exchangers inside the racks to cool the hot exhaust air. This power density is about eight times that of a rack of traditional ICT servers, which explains the shortage of affordable ‘HPC-friendly’ datacentres in Ireland.

Hosting such equipment is not cheap. Over its lifetime (2005-2008) Ireland’s first national supercomputer ‘Walton’ cost more in datacentre charges than its initial capital acquisition cost. Even now that ICHEC has partnered with academic institutions to lower this cost, a significant fraction of the budget for the national HPC service is spent to cover electricity costs alone.

Systems now being deployed push this requirement further still. 50kW per rack is now typical, and at this point air is no longer a suitable medium for removing heat. Instead, water or another liquid is passed through pipes or cold plates directly attached to CPUs and other heat generating components to carry the heat away. Other novel techniques, such as immersing components in proprietary phase change liquids or even mineral oil, are also in use. While current HPC systems use more and more power, one saving grace is that the new direct cooling techniques are much more energy efficient than older airflow methods, so the cost overhead for cooling can be as low as 5 per cent of the IT power costs (compared to 100 per cent in older traditional DCs).
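The difference between those two overheads is easy to underestimate, so here is a minimal Python sketch of what they mean for a single 50kW rack. It assumes that cooling cost scales directly with cooling power and that cooling is the only overhead on top of the IT load; a real facility has other overheads, and the function name is purely illustrative.

```python
def total_power_kw(it_power_kw, cooling_overhead):
    """Total draw for a rack: IT load plus cooling as a fraction of IT load."""
    return it_power_kw * (1.0 + cooling_overhead)


RACK_IT_KW = 50.0  # typical rack density quoted in the article

direct_liquid = total_power_kw(RACK_IT_KW, 0.05)    # 5 per cent overhead
traditional_air = total_power_kw(RACK_IT_KW, 1.00)  # 100 per cent overhead

print(f"Direct liquid cooling: {direct_liquid:.1f}kW per rack")
print(f"Traditional air cooling: {traditional_air:.1f}kW per rack")
print(f"Difference: {traditional_air - direct_liquid:.1f}kW per rack")
```

On these assumptions the same rack needs about 52.5kW in total with direct liquid cooling against 100kW in an older air cooled facility, so close to half of the older facility's electricity bill for that rack would go on cooling alone.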

“The lack of affordable data centre hosting for high density HPC equipment has represented a significant challenge for ICHEC for over a decade. But it is not just us, a high availability, high density datacentre is also something numerous other public sector agencies have expressed a need for and I would like to explore strategic partnerships with any group of organisations interested in joining us in making the case for the creation of such a facility.” JC Desplat, ICHEC Director

Many of the new datacentre developments in Ireland are being built for and by the big public cloud providers, which reflects the increasing migration of enterprise computing to public cloud platforms. Doing HPC in the cloud is a viable option in some cases, particularly where low latency interconnects or very high I/O bandwidth are not a requirement. Costs are coming down steadily, although they are not yet competitive with a centralised HPC service large enough to ensure a steady and continuous throughput of batch jobs. Hence, a hybrid scheme is likely to emerge in the future, using public cloud for regular, less demanding HPC applications and dedicated private resources to accommodate novel architectures and specific requirements.

These specific requirements will vary widely. Examples include the hosting of very large or highly sensitive datasets, or the use of new, experimental hardware which is not fully production ready and requires manual intervention from time to time. Oftentimes, this hardware will have specific hosting requirements in terms of proprietary non-standard racks or the need for direct water cooling. ICHEC is not alone in seeking hosting facilities which are cost effective and flexible enough to accommodate these diverse requirements, and a case can be made for a facility shared across public sector agencies which could meet the different demands of research and non-standard IT infrastructure.

Niall Wilson

Infrastructure Manager

Irish Centre for High End Computing

Web: www.ichec.ie

Tel: +353 1 524 1608

Email: niall.wilson@ichec.ie