Digital government in action: RPA in shared services

It is important to recognise that the positive impact of shared services on the Civil Service goes well beyond the documented cost savings already delivered, writes Hilary Murphy-Fagan, CEO of the National Shared Services Office (NSSO).

The National Shared Services Office is the shared services provider for the Irish Civil Service. It provides HR and pension administration services to 38,200 civil servants, and payroll and related services to 145,000 public servants, of whom over 60,000 have retired. A government office, the NSSO is a service partner to all government departments and a range of public service bodies.

Now, for the first time, the Civil Service is effectively operating a single set of HR and pension processes, guided by the same set of policies. This creates the opportunity to modernise and to give users easy online access to professional HR and payroll services through self-service and digital channels. It also provides a transparent and fair system, which benefits service users.

HR data analytics are now readily available to management on a Civil Service-wide basis, and the scale and specialist expertise of the NSSO allow reforms, such as the recent PAYE modernisation, to be implemented efficiently across the Civil Service.

At a strategic level, insights gained through shared services are helping to inform pay talks and the development of government policies, including those on absence, leave and mobility. They are also critical to the delivery of the Civil Service People Strategy and the new HR operating model. The NSSO is a key partner in developing and delivering these strategic initiatives.

Innovation has been at the heart of the NSSO since its inception and nowhere is this more evident than in the strides that it has taken in deploying Robotic Process Automation (RPA) technology to enhance service delivery.

RPA origins

Robotic Process Automation (RPA) technology was first introduced in the NSSO in 2017, when a proof-of-concept trial was established to explore its potential to improve operational efficiency. The scale and extent of the HR shared services operation offered ‘prima facie’ evidence of ample opportunity for automation, with the consequent release of thousands of hours back to the business. This, in turn, released staff from routine and monotonous tasks, allowing them to focus on value-adding activities such as continuous improvement initiatives.

A pilot programme explored the technology for a limited period, to determine if business value could be realised from RPA within the context of HR shared services. The potential benefits identified for the NSSO included:

• increased speed of operations, eliminating backlogs;

• improvements in compliance, quality and operational agility; and

• improved employee morale and stronger employee engagement.

A project team was established, comprehensive training was provided, and full support and mentoring services were facilitated through the Department of Public Expenditure and Reform. The pilot programme was successful and delivered a number of significant wins to the organisation, and an RPA Centre of Excellence model was established within the NSSO.

In the early stages, the focus was primarily on the automation of processes within HR shared services. More recently, RPA processes have also been successfully deployed within payroll shared services, and this is anticipated to grow substantially in 2020. The NSSO is also deploying RPA to add value to cross-division initiatives.

Lessons learned

While the NSSO is at an early stage of maturity, it is continuously exploring and delivering optimum efficiencies through deeper application of RPA technology. There are a number of lessons learned from the journey so far.

• Ensure that the process proposed for RPA is suited to automation: it should be structured, not in flux and not recently changed, i.e. it has been tried and tested and is proven to work.

• Do not start with highly complex processes. Start with smaller processes or automate part of a bigger process. This offers the team a good opportunity for learning and acclimatisation, helps win the trust of the organisation and mitigates the associated risks.

• Do not ‘let perfect be the enemy of good’. If the process is structured and works well, it is a candidate; don’t waste time looking for the perfect process to automate.

• Do not allow scope creep. Define your processes tightly and deliver the automation; add embellishments in subsequent phases.

• Do not try to fix a process through automation; this is a recipe for disaster. Only automate processes that have been well tried and tested. If there are deficiencies in the process, fix them first.

• In the early stages, it is important to get automated processes live and visible in order to win the support and trust of both management and clients.

• Set realistic time frames. Be cautious at first, but do not be afraid to make mistakes; learn from them and mitigate risks.

• Proactively engage with your subject matter experts to build trust and relationships.

• The relevant operations team must agree to release resources/staff to work with the RPA team to validate and test the RPA.

• The operations team must retain their skill set and process knowledge, in case the robot cannot run.

• As proficiency builds and more robots are deployed, there is a need for strong governance with robust decision making on priority and pipelines to manage the demand.
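
Several of the lessons above amount to a screening checklist for candidate processes. As a minimal sketch only, and not a description of any tooling used by the NSSO, such a checklist could be expressed in Python as follows. The `ProcessCandidate` fields and the `screen_candidate` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProcessCandidate:
    """Hypothetical description of a process proposed for automation."""
    name: str
    is_structured: bool               # follows fixed, rule-based steps
    recently_changed: bool            # in flux or changed recently
    proven_in_operation: bool         # tried, tested and known to work
    is_highly_complex: bool           # better deferred or split into parts
    staff_released_for_testing: bool  # operations team will help validate


def screen_candidate(candidate: ProcessCandidate) -> List[str]:
    """Return reasons to hold back; an empty list means no concern was found."""
    concerns = []
    if not candidate.is_structured:
        concerns.append("process is not structured")
    if candidate.recently_changed:
        concerns.append("process is in flux or was changed recently")
    if not candidate.proven_in_operation:
        concerns.append("process is not yet proven; fix it before automating")
    if candidate.is_highly_complex:
        concerns.append("process is highly complex; start with a smaller part")
    if not candidate.staff_released_for_testing:
        concerns.append("operations staff are not available to validate the robot")
    return concerns


# Illustrative usage with made-up values.
example = ProcessCandidate(
    name="example process",
    is_structured=True,
    recently_changed=False,
    proven_in_operation=True,
    is_highly_complex=False,
    staff_released_for_testing=True,
)
print(screen_candidate(example))
```

A checklist of this kind deliberately returns reasons rather than a score, in keeping with the advice not to search for the perfect process: any structured, proven process with no listed concern is a candidate.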

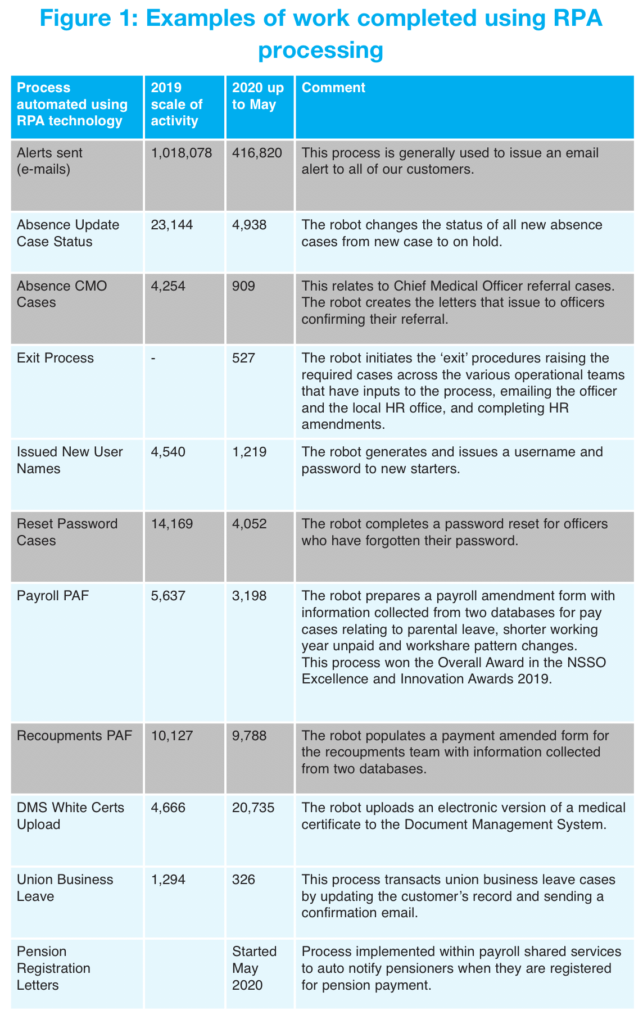

The NSSO has implemented a significant number of robotic process automations (some of which are included in figure 1), the impact of which has been to release significant capacity back to the organisation.

Robotic Process Automation has become an invaluable digital workforce support in the NSSO. We have conservatively estimated that RPA activity in the NSSO in 2019 was equivalent in value to 14 full-time equivalents (FTEs). RPA has been instrumental in our growth and development, given the scale and demands of our shared services operations. The NSSO will continue to invest in RPA technology to realise the opportunities it presents and to better enable our people, so that we can better serve our customers’ needs.

John Ryan, Assistant Secretary for Employee Services, NSSO, says: “Each of our operations teams in HR shared services has experienced first-hand the potential of RPA and they readily embrace the technology as a means of removing the more monotonous tasks from their work day. We currently have a waiting list of processes to be automated. The fact that demand is outstripping supply internally is evidence of how RPA has been embraced within the NSSO. This has been invaluable as it has permitted the organisation to have scalability and keep pace with a growing demand that has to be serviced from a static resource complement.”

Looking to the future, we will continue to build our RPA expertise and grow our capacity and capability to roll out the functionality across all divisions within the NSSO.